Containers and Kubernetes - the Keynote

@tekgrrl is presenting in her keynote Kubernetes. You can read all about it at https://kubernetes.io .

Interestingly, being asked about security of containers, she commented that this still very much work in progress. Any multi-tenant usage might not be a good idea for applications that require security guarantees.

Yelp’s Microservices Story

Scott spoke to us about the difficulties yelp faced moving their transaction platform to a microservices architecture.

Problems they faced:

- increase in API complexity

- coupling between individual services increased

- interactions between all services got very difficult to debug

- the whole thing got slower

Decouple all the things

How do you decouple shared concepts in a maintainable way across multiple services? If you ever want to refactor those, how would you do that?

Yelp’s answer to that was to move to a API description language called SWAGGER.

This worked as a documentation system for itself, but requires upfront a gigantic very detailed specification document.

After all the hard work, they’ve gained a pretty API with a web view that’s self-documenting.

- Lessons

- interfaces should be intentional

- interfaces need to be explicit

- automate everything, especially the repetitive mechanical stuff

- logging is everything - use logstash and be happy

- they’ve built an alert tool on top of logstash called elastalert https://github.com/yelp/elastalert

- General Lessons

- Measure everything

- be explicit

- know your business and build based on that

- automate everything

Summary is available as yelp/service-principles at https://github.com/Yelp/service-principles

DumbDev

Programmers aren’t good at remembering. Modern research shows that humans in general aren’t able to hold more than 6 to 7 facts in short term memory.

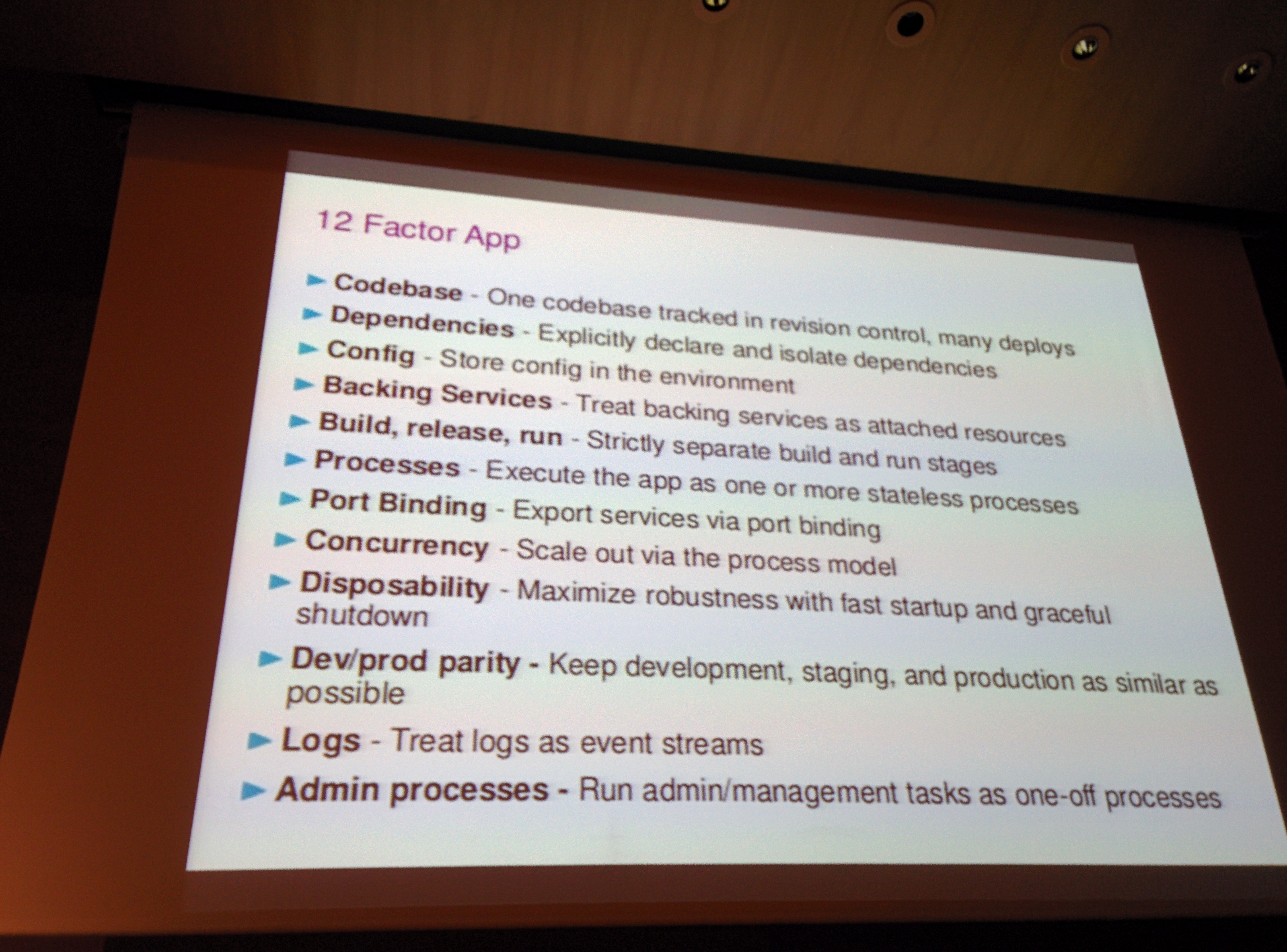

For example, try to remember all the 12 rules from 12 factor app in the next minute.

Now, please try to repeat them without looking at the list.

Research has shown that you should be able to repeat about 4-7 facts of the twelve, don’t worry if it’s more or less.

This illustrates very nicely that we need a framework to ensure that any problem topic actually fits into our head.

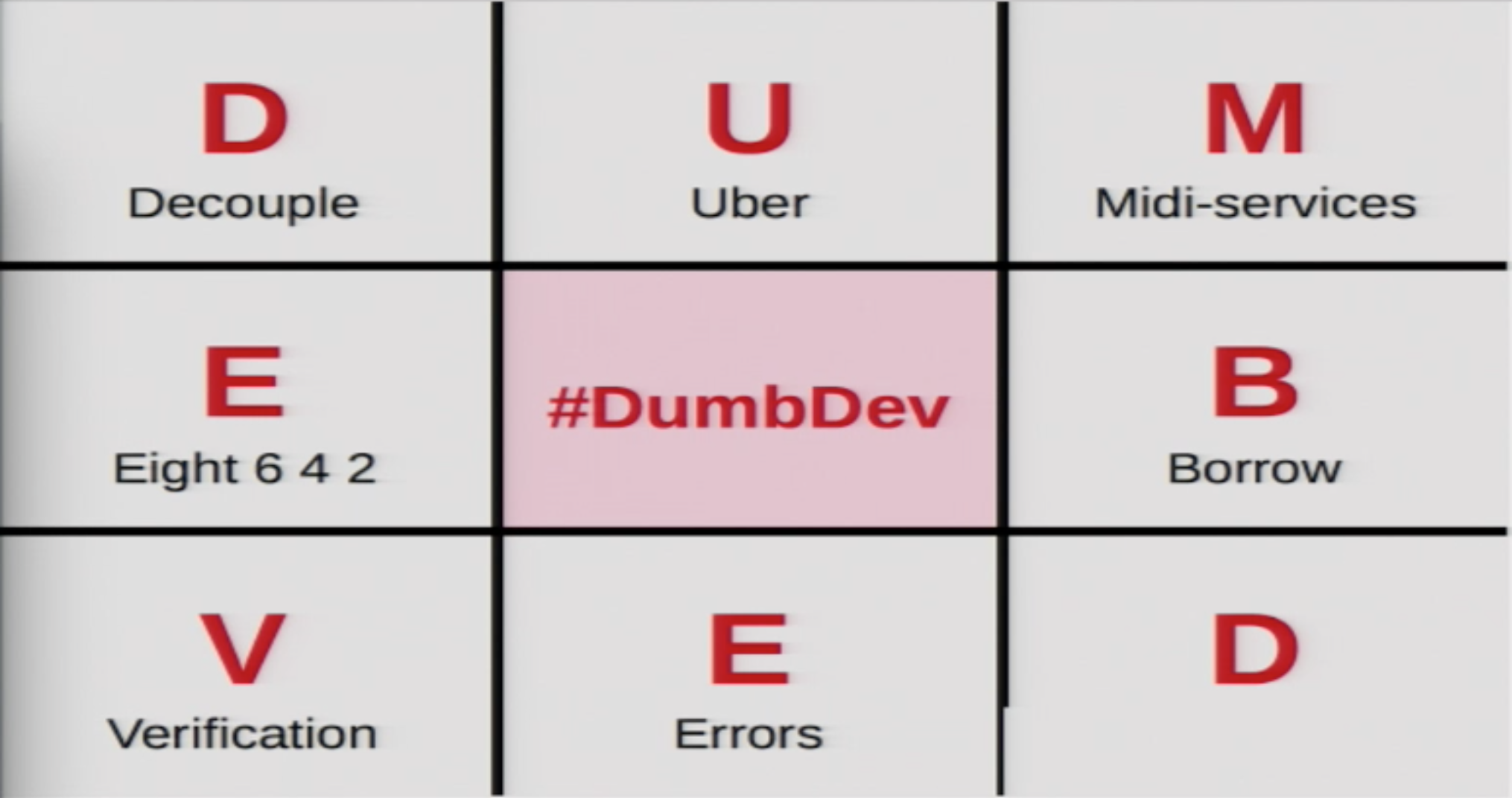

Rob Collins then introduced his idea to utilize a Noughts and Crosses (Tic-tac-toe) board,

which any concept, idea, proposal or diagram must fit into.

To explain this further he then used the concept to visualize itself; a concept inception.

You can watch the whole talk, here:

https://archive.org/details/EuroPython_2015_VklpGvbz

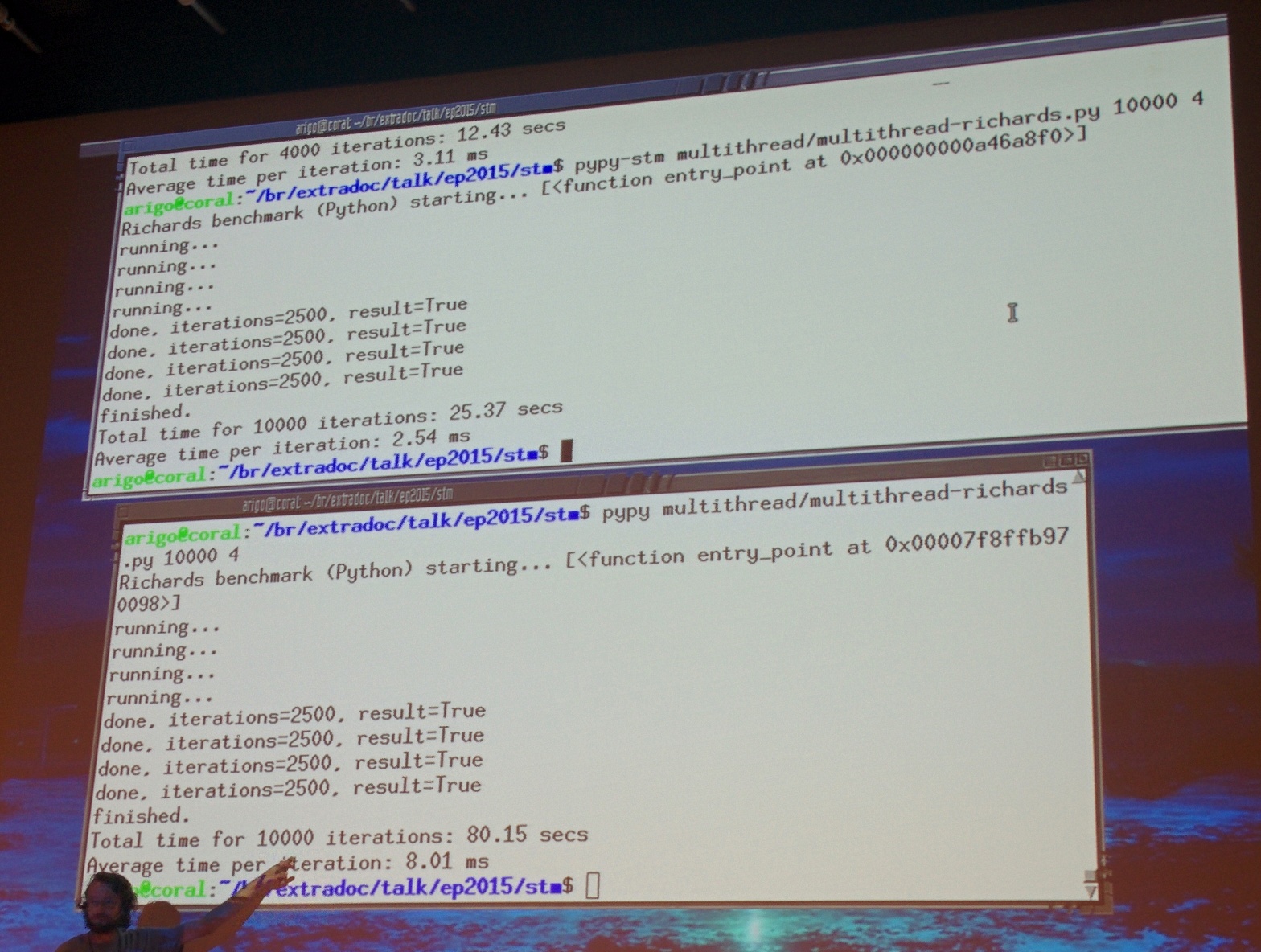

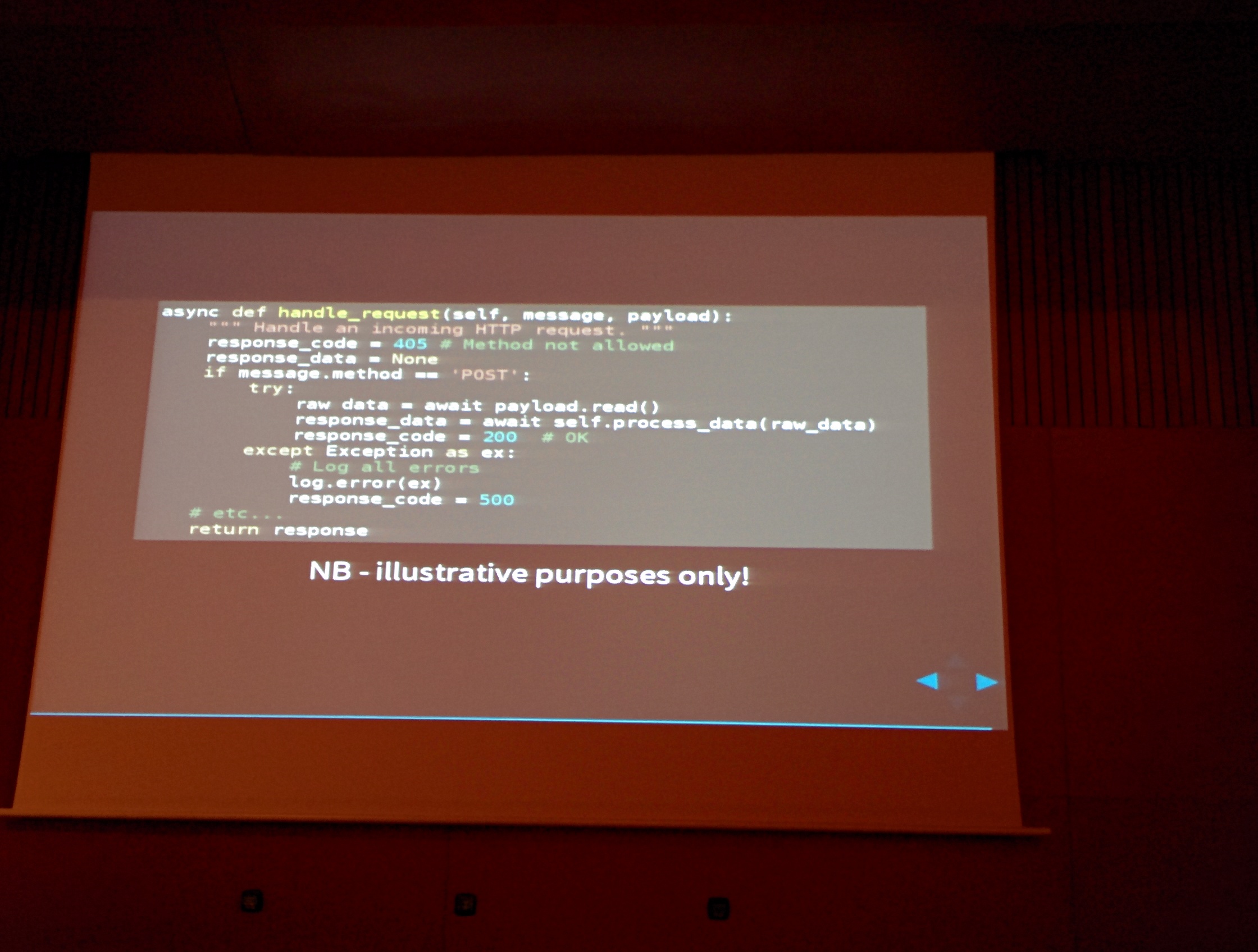

Parallelism Shootout - threads vs. multiprocesses vs. asyncio

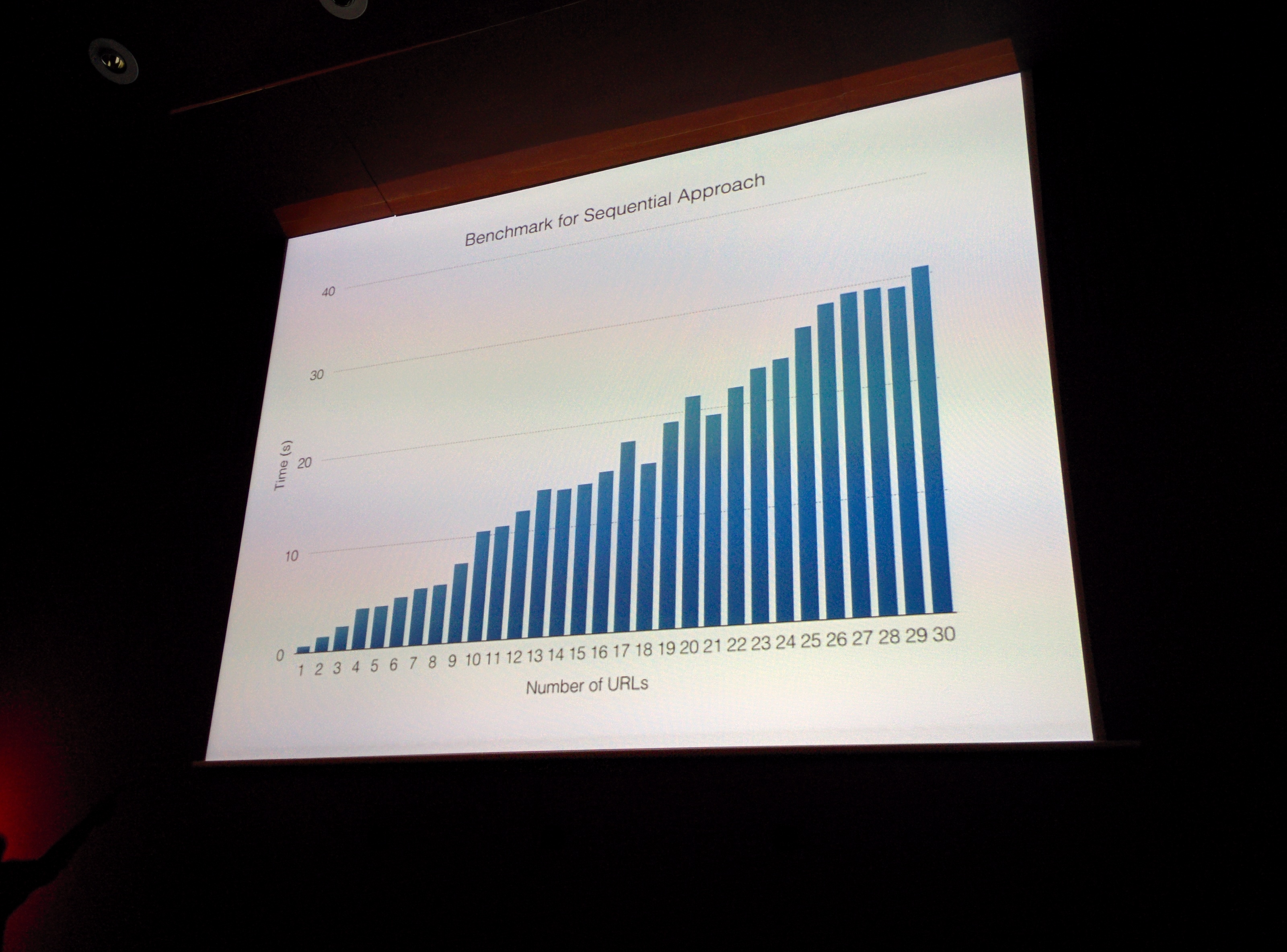

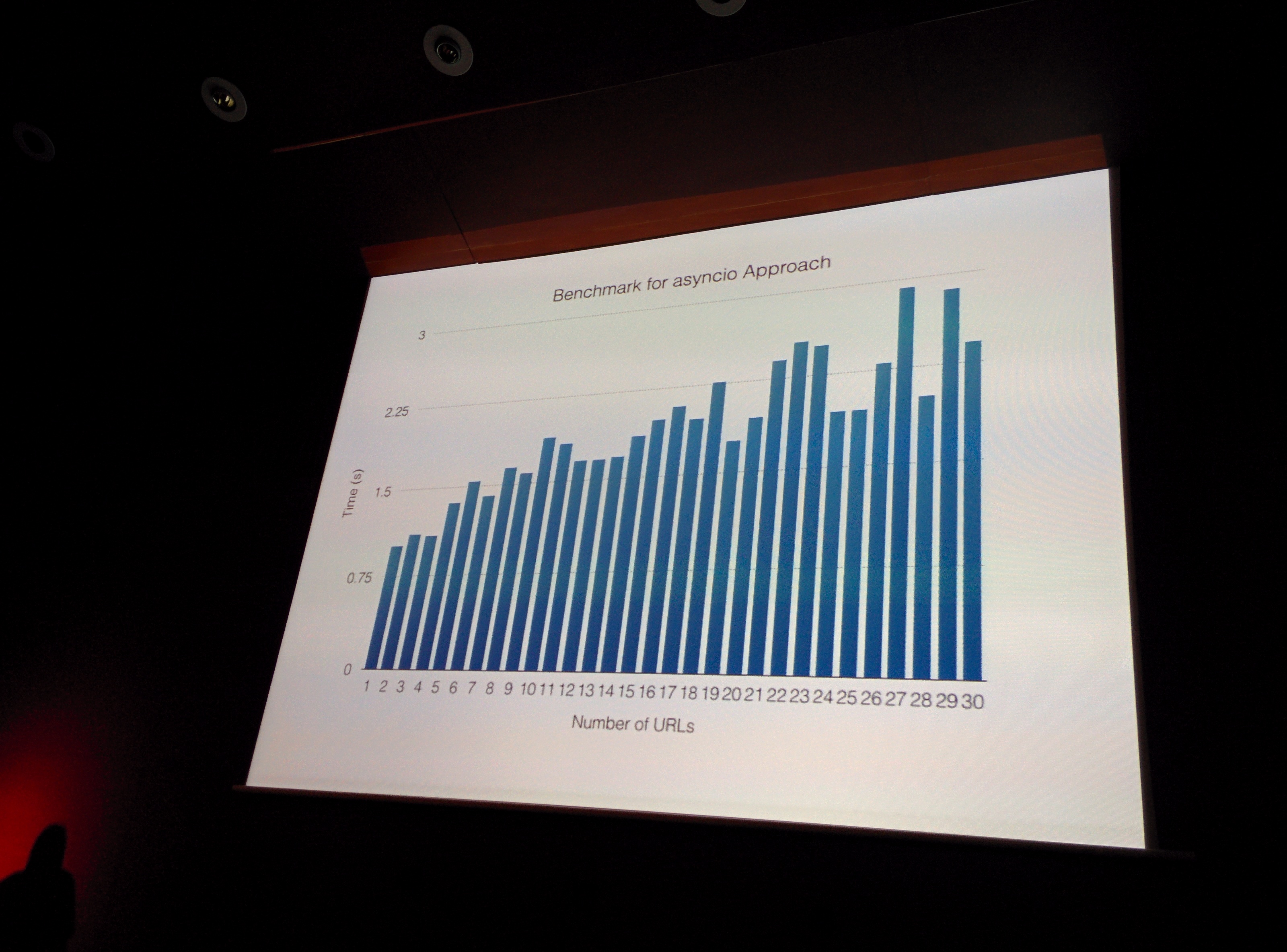

Shahriar Tajbakhsh benchmarked different parallelism approaches for I/O bound applications. His benchmark application was download 30 websites.

To compare all the parallel approaches, first lets look at the sequential timing.

The sequential processing time increases as expected in this benchmark.

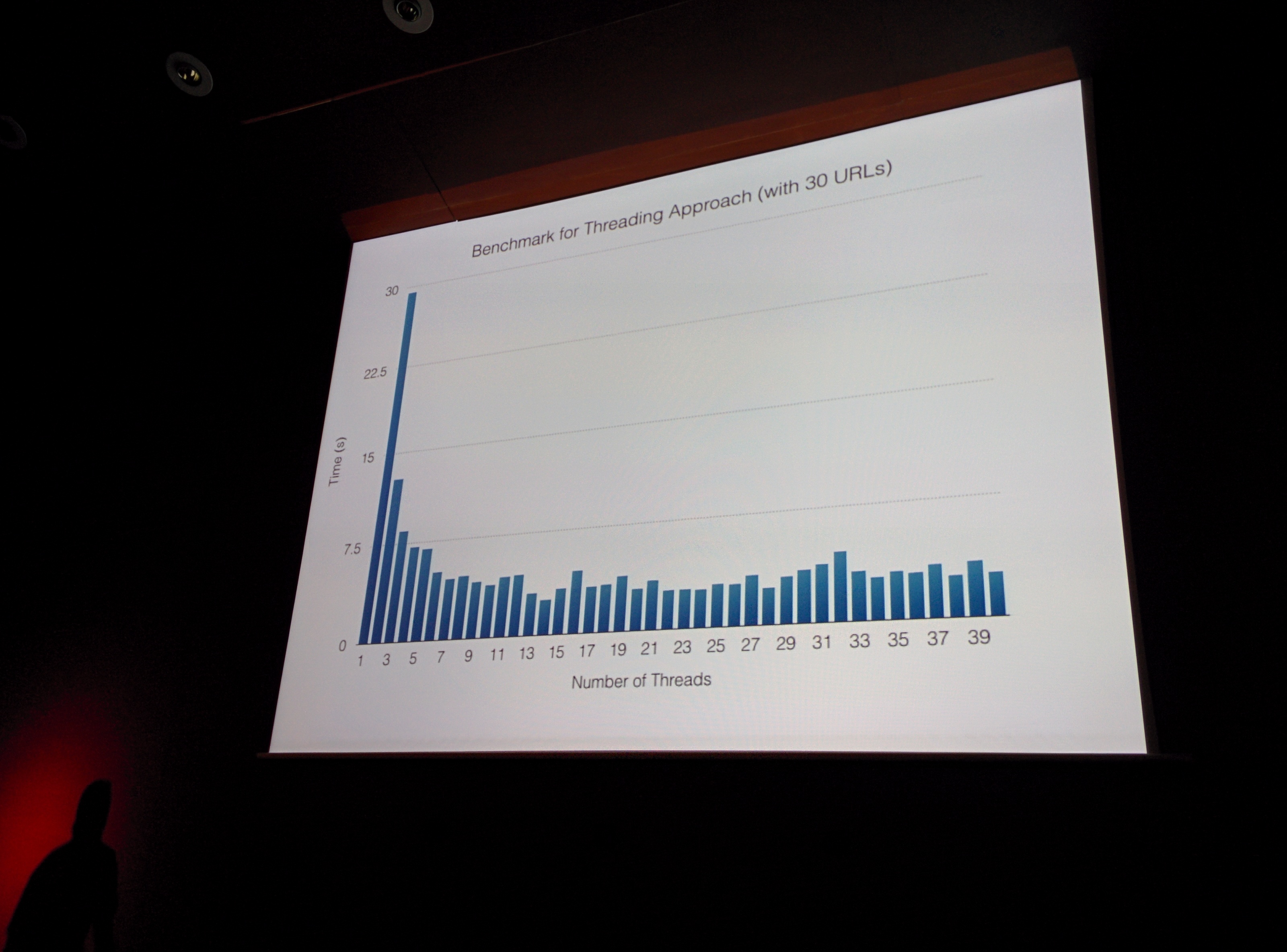

No surprises in the threaded version either, there is a certain amount of setup time

to get the threading started.

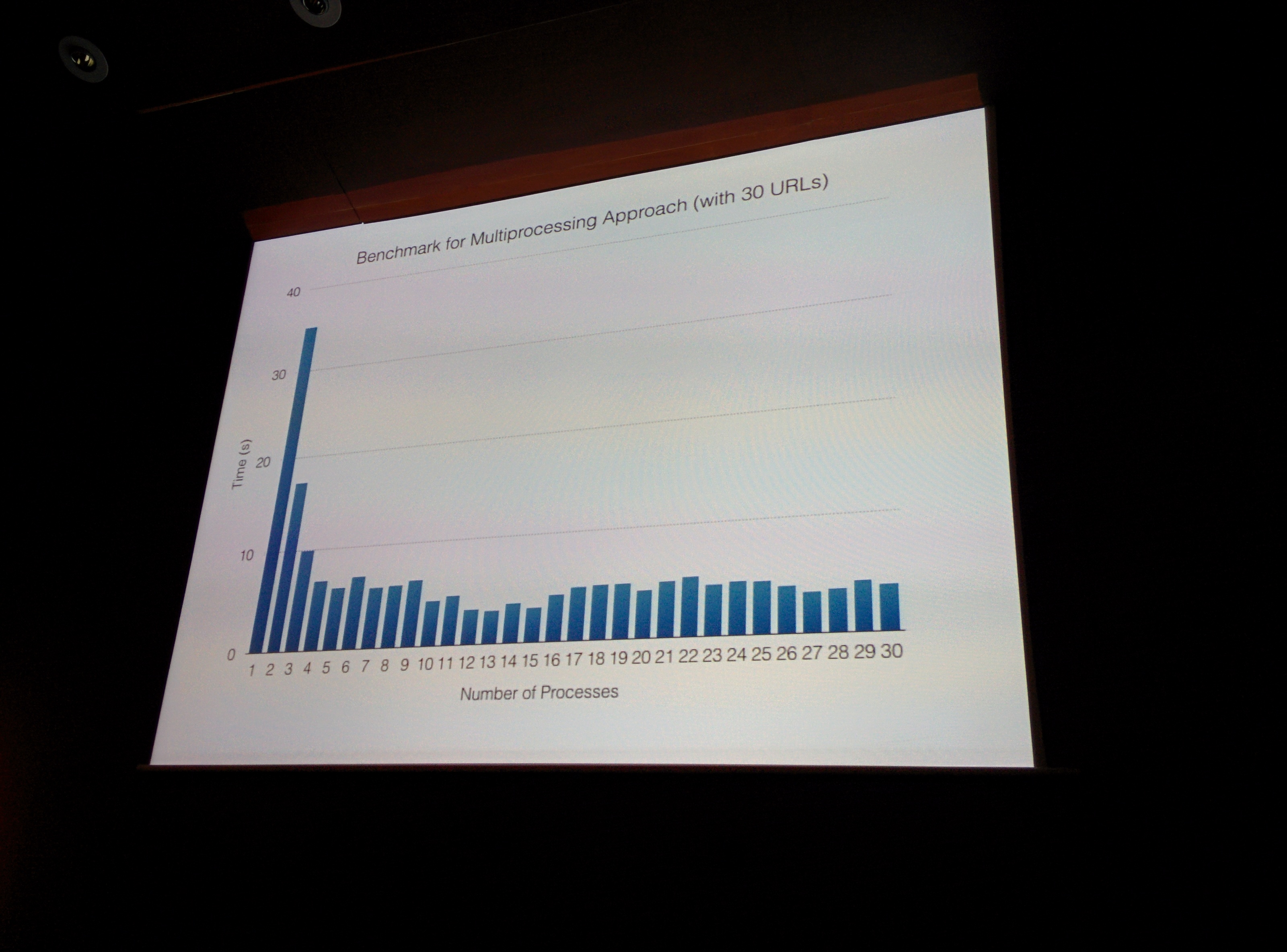

Multiprocessing has similar overhead and similar results.

We can clearly see a winner here, AsyncIO is significantly

faster than any of the other approaches.

The sourcecode for the benchmarks can be found at:

https://github.com/s16h?tab=repositories

when he publishes them.

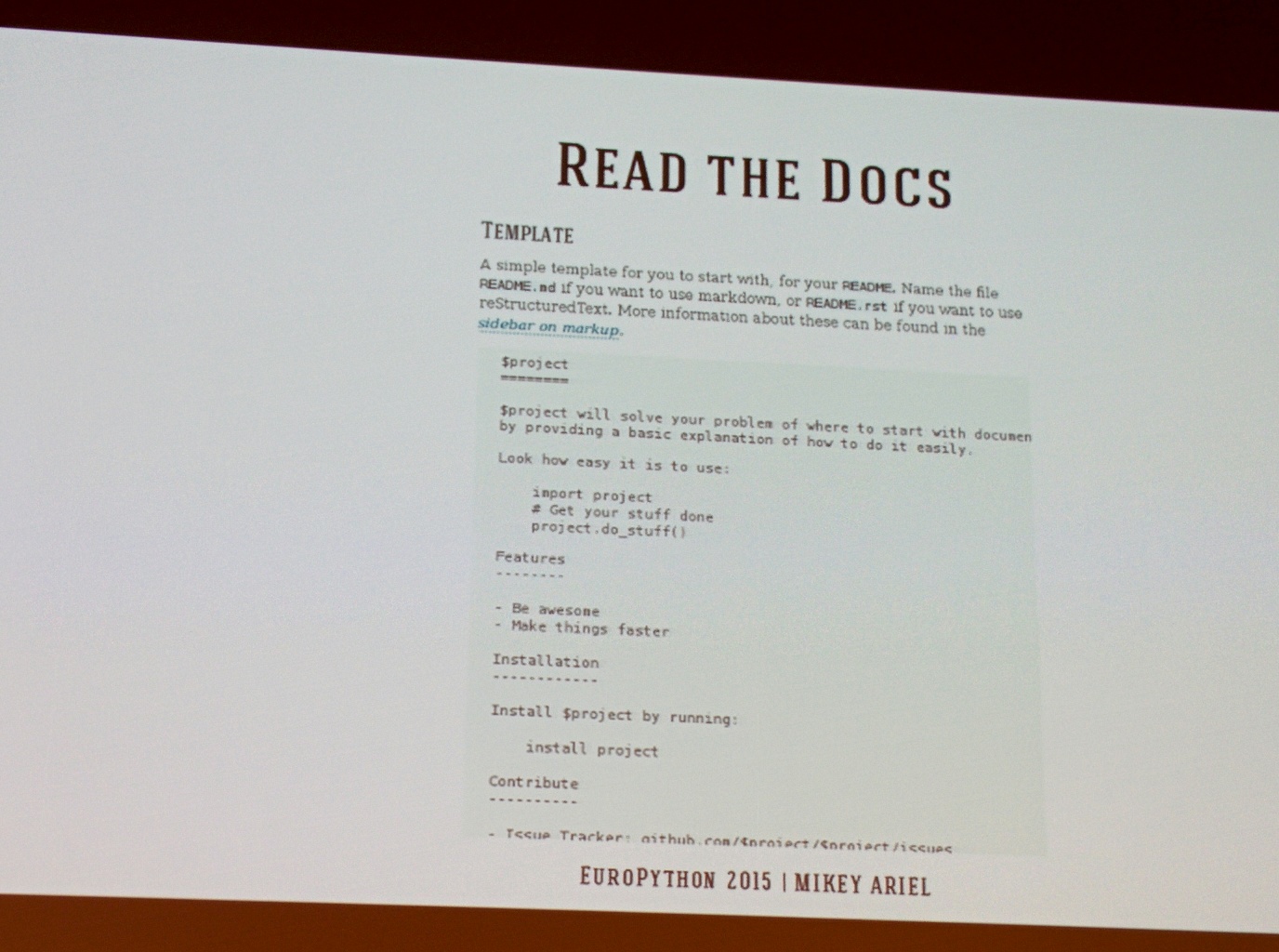

FOSS Docs by Mikey Ariel

Mikey is senior technical writer at Red Hat, talking about open-source projects and

why their documentation is so essential.

Documentation helps to:

— build a unified and intuitive user experience

— have portable and adoptable workflows

— create a scalable and adaptable project

How should one keep up with documentation? Build a tighter integration with the developers on the project and make documentation part of the testing cycle: DevOps for Docs.

Bad documentation is worse than no documentation, always ask: Who are my readers and write for their needs.

As a simple suggestion on where to start with documentation, she suggested a simple markdown based readme file in the following format: